Integrity in AI Use at the University of Arizona: What Do Our Students and Faculty Think?

This post summarizes initial findings from an ongoing survey of students and faculty at the University of Arizona on the ethics of AI use in the classroom. It is the first in a series of posts sharing our findings with you.

Perceptions of Ethical Implications of Using AI in the Classroom

Generative AI is everywhere, including in your classroom. Since its mass debut in late 2022, it has raised important questions about policies, integrity, learning, technology, and teaching.

Because of this, many faculty are wrestling with how best to support classroom use of AI. They want to embrace the future and expose their students to this technology, but they also want to see evidence of their students' critical thinking and intellectual growth over the course of a semester.

The question becomes, then, how do we allow students to leverage innovations in technology in a way that still centers their own thinking and knowledge-building?

To begin exploring these ideas, we wanted to gather information about where things currently stand: what students and faculty think and feel about the integrity of using AI in the classroom, and how they are actually using it.

AI Working Groups at the University of Arizona

In Summer 2023, we participated in the University's AI Working Groups initiative. Our group focused on Integrity in AI. A sub-group of us set out specifically to create a survey exploring how faculty and students view the ethical implications of using AI in the classroom, for both teaching and learning.

In the following months, we developed two brief surveys, one for faculty and one for students, and we received IRB approval.

Background and Context

Artificial Intelligence (AI) encompasses many technologies, but our focus is on generative AI: applications that create text, code, images, audio, and video that can be mistaken for human-made content. Examples include ChatGPT, which generates text, and DALL-E, which creates images from text prompts.

The Importance of Ethics and Integrity in AI Use

In a university setting, ethics and integrity directly impact academic honesty: presenting your original work, giving credit to others for their contributions, and avoiding plagiarism, cheating, and fabricating data or information. In our study, we conceptualized these terms in the following ways:

- Ethics pertains to the moral standards that both students and faculty are expected to uphold in their academic and professional pursuits.

- Integrity centers around consistently acting in alignment with a set of values and beliefs, even when no one is watching.

- Integrity is a subset of ethics, representing the personal commitment to ethical behavior.

Our Research Project

In this context, we wanted to learn more about how faculty and students view integrity in AI as it applies to education. Specifically, we were interested in how students use it in their learning (both inside and outside of the classroom) and how faculty use it as a tool to design their courses.

To this end, the survey asks faculty and students questions that address:

- How familiar they are with generative AI technologies

- In what ways they have used generative AI

- How AI has influenced, currently influences, or may influence the dynamics of their teaching or coursework

- Examples of integrity when using generative AI

- Whether they think AI can be used ethically in the classroom

- What their main concerns are about using generative AI

We are currently in the process of surveying both students and faculty, who each get a similar set of questions tailored to their role.

Select Initial Findings

While our survey is still ongoing, we wanted to share a few initial findings. Our preliminary data is based on 673* responses from faculty, graduate students, and undergraduate students.

The data used here was collected during the Spring 2024, Summer 2024, and Fall 2024 semesters.

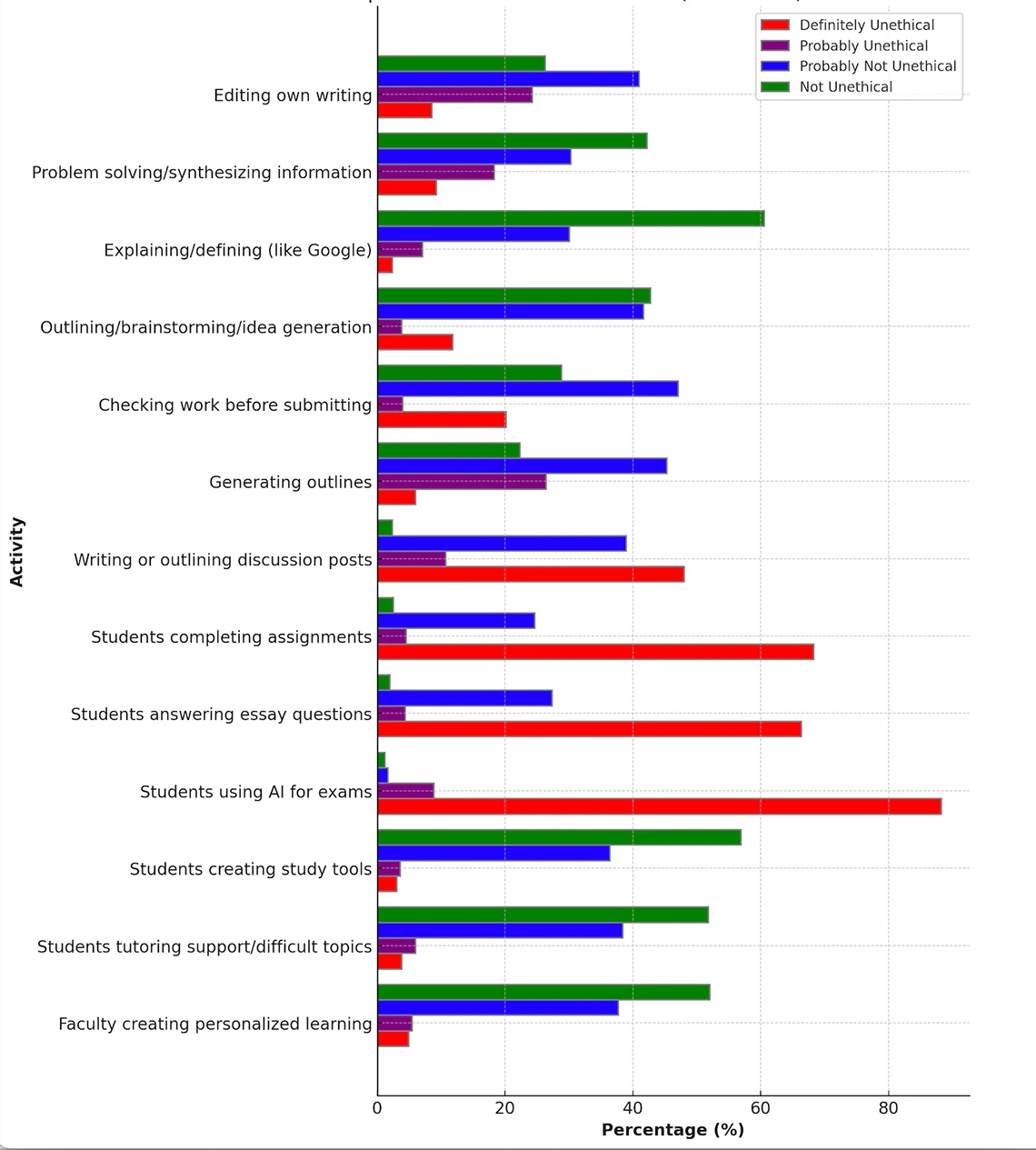

What Students Are Saying about Ethical Uses of AI

Students were asked to rate how ethical or unethical it would be to use AI for each task in a list of common classroom activities. Rating options were: Definitely Unethical; Probably Unethical; Probably Not Unethical (i.e., Probably Fine to Do); Definitely Not Unethical (i.e., Totally Fine to Do).

Of the 581 students who responded, 559 were undergraduates and 22 were graduate students.

AI-Supported Activities Rated As the Most Ethical by Students

Several AI-related activities were viewed as ethical by the majority of students. These activities were:

- Using AI Like a Search Engine (e.g., Google): Most students considered using AI as a search engine to gather information and explain or define terms to be an ethical use of the tool.

- Creating Your Own Personalized and Adaptive Learning Support: Students generally considered using AI to create their own support for learning to be ethical.

- Personalized Tutoring to Help Understand Difficult Topics: Using AI to support their learning was widely viewed as ethical among student respondents.

- Creating Study Tools: Using AI to help them study was also seen as ethical by the majority of student respondents, who consider this a legitimate way to support their learning process.

AI-Supported Activities Ranked As the Least Ethical by Students

Conversely, some AI uses were overwhelmingly viewed as unethical, particularly those that directly involve AI generating content for students to turn in. These activities were:

- Taking Exams with AI Assistance: This activity was rated the most unethical, with a significant majority of students considering it a form of cheating.

- Answering Essay Questions with AI: Similar to using AI during exams, using AI to answer essay questions was also considered highly unethical.

- Completing Assignments with AI: AI assistance in completing assignments was also largely viewed as unethical by student respondents. This includes outlining and writing discussion posts.

Overall, student participants felt that using AI to support and supplement their learning is ethical, while using it to complete higher-stakes work is unethical.

We also gave students the opportunity to provide open-ended responses about how AI can be used in the classroom in a way that supports academic integrity. Here are select responses that add context to the findings above and offer some insight into how students weigh AI-generated content against their own academic work:

“I think using it as a resource or to help guide you is good, but not having it actually do the assignment for you. It's good to provide resources, try and find ideas or inspiration, and give you a different perspective if needed.”

“It should only be used to reinforce what you know or are struggling to learn. It shouldn't be used to do all the work for you, because it will not be beneficial in the long run.”

“Learn how to use it, but understand that they [students] need to have knowledge in certain things and they cannot expect AI to save them every time. I take a lot of pride in my ability to do things myself.”

AI-Supported Activities Ranked by Students

A bar graph showing various academic activities on the y-axis and percentages on the x-axis, indicating students’ perceptions of the ethicality of using AI for each activity. The graph reveals that students view using AI for exams as the most unethical activity (predominantly red).

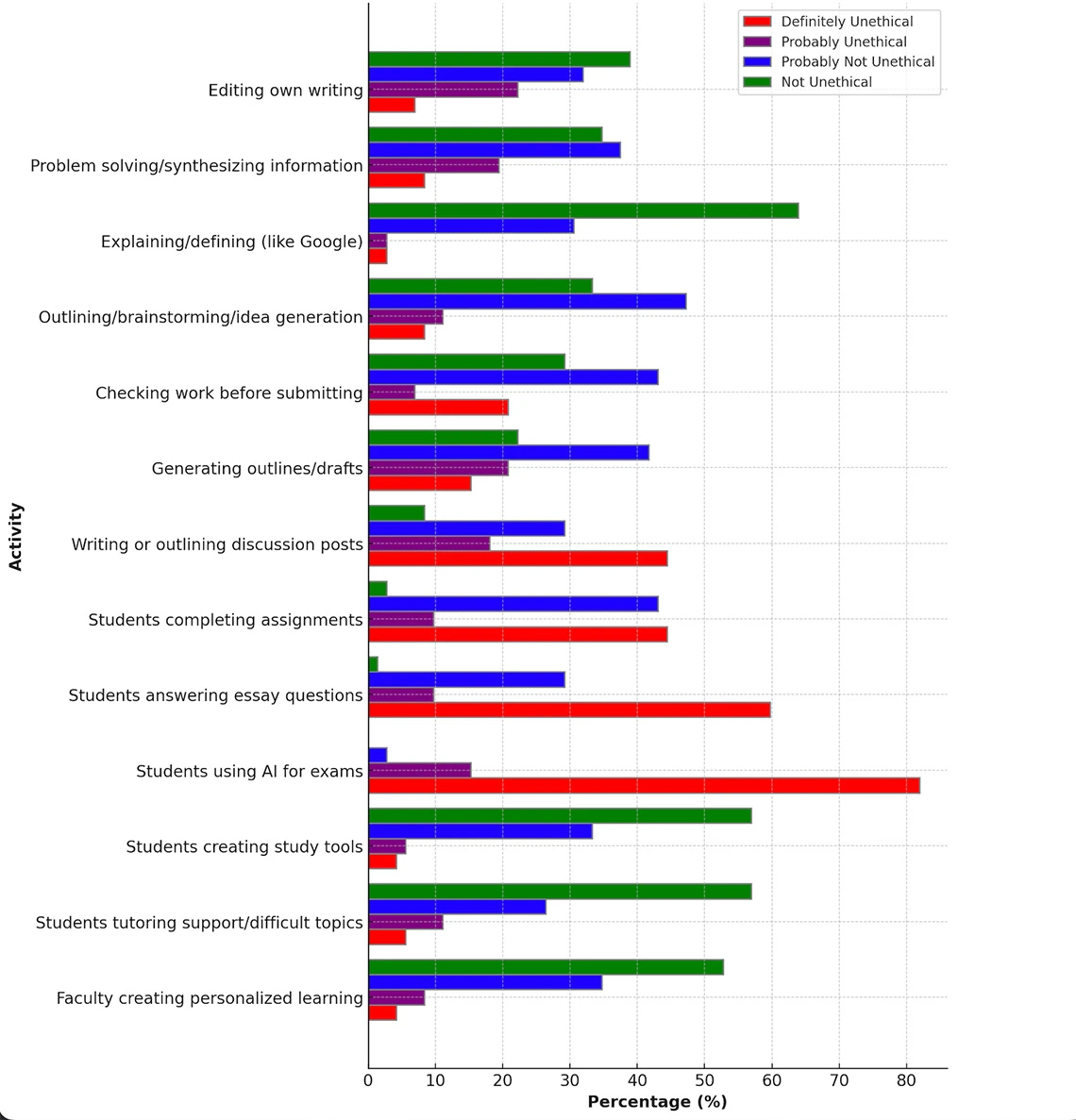

What Faculty Are Saying about Ethical Uses of AI

Faculty were also asked to rate the ethics of using AI for each task in a list of common classroom activities. The same rating options were given: Definitely Unethical; Probably Unethical; Probably Not Unethical (i.e., Probably Fine to Do); Definitely Not Unethical (i.e., Totally Fine to Do).

A total of 81 faculty responded.

AI-Supported Activities Ranked As the Most Ethical by Faculty

The activities that were rated as the most ethical by faculty respondents included:

- Faculty Use of AI Tools to Create Personalized and Adaptive Learning for Students: Most faculty agreed that leveraging AI to help them create activities and generate ideas to support their students’ learning was an ethical use of AI.

- Students Using AI Tools for Self-Editing and Improvement: Faculty also generally considered it ethical for students to use AI tools to edit their own writing.

- Students Seeking Personalized Tutoring Support through AI: Faculty also felt that students' use of AI tools to supplement their learning or to help them understand more difficult topics was ethical.

- Using AI Like a Search Engine (e.g., Google): Faculty also generally considered using AI to gather information and explain or define terms to be an ethical use of the tool.

AI-Supported Activities Ranked As the Least Ethical by Faculty

On the other end of the spectrum, faculty overwhelmingly viewed several activities as unethical, particularly those in which AI completes work that students turn in. These were:

- Students Using AI Tools to Take Exams: This activity was rated the most unethical, with a significant majority of respondents viewing it as definitely unethical.

- Students Using AI Tools to Answer Essay Questions: Similar to the above, the use of technology to generate or assist in answering essay questions was also considered highly unethical.

- Students Using AI Tools to Complete Assignments: Rounding out the list of unethical activities, students using tools to complete their assignments, including prepping or writing discussion posts, was also viewed as unethical.

Overall, faculty responded that the use of AI to supplement students’ learning was ethical, while using it to complete work or exams was unethical.

To give context to their responses, we also asked faculty what their main concerns were about using generative AI or having their students use generative AI in their course. Here are a few of their responses:

“I don't want students to use it as a crutch without learning the basic tools of proper communication, researching and problem solving. Students need to learn the fundamentals and then use AI as an additional resource.”

“Not learning writing skills that they will need to get jobs and change the world.”

“My main concern is that students will lose the ability to develop novel work on their own if they never have to practice doing it. This could reduce innovative thinking in the longer term.”

AI-Supported Activities Ranked by Faculty

A bar graph showing various academic activities on the y-axis and percentages on the x-axis, indicating faculty perceptions of the ethicality of using AI for each activity. The graph shows that using AI for exams is viewed as the most unethical activity, while explaining/defining information and creating study tools are perceived as the least unethical.

Discussion

In reviewing this data, one of the most compelling findings is that student and faculty respondents generally agreed on the most and least ethical uses of AI in the classroom. Both groups appear to see AI as a valuable learning tool when it supplements learning and instruction, but not as a replacement for the student's own critical thinking, understanding, and effort.

While this data may not come as a surprise, it’s important to be asking these questions and considering student and faculty perspectives.

Additionally, these responses provide some insight into potential ways to continue to improve teaching models, expand modes of learning, and support students in a way that is manageable for faculty while allowing students to develop strategies to guide their own learning. AI offers a way to help students better understand complex subjects, get preliminary feedback on their original work, and use technology to support their learning.

Next Steps

We know that faculty and students (as well as staff and administrators) have a lot on their minds about teaching and learning with AI, and that this discussion is far from over. To that end, we plan to continue analyzing and sharing our results over the coming months. This includes additional results on the "grey areas" we intentionally omitted from this initial discussion: classroom activities where students and faculty are less clear-cut about whether they are ethical.

The survey will continue to run during the Fall 2024 semester. If you are interested in helping us understand how faculty and/or students view the use of AI in the classroom, please consider taking and/or sharing our survey.

With the dramatic rise of Generative AI technology (like ChatGPT and Google Bard), we're interested in your opinions on its ethical use in higher education. Your participation in this brief survey would be appreciated: it should take you less than 10 minutes to complete and will give us valuable insights into the University community's views on the ethical implications of Generative AI in the classroom.

Disclaimer: ChatGPT helped to summarize the preliminary findings from the survey and create charts.

*Data exported on September 11, 2024.